- Optional Ceph Backup Solutions
- Backup Ceph Based on Snapshot
- Import and Export of Incremental Snapshots for RBD
- Snapshot-based Remote Disaster Recovery Solution for Ceph
- Vinchin Backup & Recovery---Your Virtualization Guard
- Backup Ceph FAQs
- Conclusion

Proxmox is a virtualization management platform for running virtual machines and containers. To get high-performance, highly available storage, many users choose Ceph, which integrates directly with Proxmox. Ceph is a distributed storage system that provides object, block, and file system storage with built-in replication and horizontal scalability, which makes it a good fit for Proxmox environments.

Optional Ceph Backup Solutions

There are several ways to back up Ceph data, including snapshots and replication. A snapshot is a convenient and efficient method: it captures a consistent point-in-time image of the data almost instantly. With snapshots, we can take frequent point-in-time backups of data in the Ceph cluster and quickly restore it when needed. Ceph also supports remote replication, so data can be backed up to a different data center or to cloud storage.

1. RBD Mirroring

This asynchronously mirrors image data between two independent Ceph clusters in near real time. It requires Ceph Jewel or newer.

2. Snapshot-based Backup

Using Ceph RBD's snapshot technology, data is periodically backed up to the disaster recovery center as differential files. When the primary data center fails, the latest backup can be restored at the disaster recovery center and the corresponding virtual machines restarted, which minimizes recovery time. This kind of backup rolls the VM image back to a snapshot state, so any data written between that snapshot and the failure is lost.

Backup Ceph Based on Snapshot

Ceph's snapshot technology was originally intended for rolling back an RBD image or a pool, but administrators can also use snapshots for remote image backup and disaster recovery.

Ceph Snapshot

Ceph supports two levels of snapshot functionality: Pool and RBD. Both levels of snapshots use a Copy On Write mechanism. When creating a snapshot, no copying is performed; instead, the server is instructed to retain all related disk blocks, preventing them from being overwritten. When write and delete operations occur, the original disk blocks containing the data are not modified; instead, the modified portions are written to other available disk blocks.

1) RBD

Creating an image

# rbd create <image-name> --size 1024 -p <pool-name>

At this point librbd only creates the metadata for the image; no space is allocated in Ceph until data is written.

Creating a snapshot based on the current image state

# rbd snap create <pool-name/image-name> --snap <snap-name>

Rolling back the image to the state at snapshot creation

# rbd snap rollback <pool-name/image-name> --snap <snap-name>

RBD snapshot creation in Ceph involves the following steps:

a) Sending a request to the Ceph monitor to get the latest snapshot sequence number (“snap_seq”).

b) Saving the snap_name and snap_seq in the RBD metadata.
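The RBD snapshot commands above can be wrapped in a few small shell helpers. This is a minimal sketch, not an official tool: the function names, the `DRY_RUN` switch, and the timestamped naming scheme are assumptions made for illustration. With `DRY_RUN=1` (the default here) each helper only prints the `rbd` command it would run.

```shell
#!/bin/sh
# Sketch: wrap the rbd snapshot commands shown above.
# DRY_RUN=1 (default) prints each command instead of executing it.
DRY_RUN="${DRY_RUN:-1}"

# run CMD...: print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# snap_name PREFIX [TIMESTAMP]: build a name such as backup-20240101T000000.
# The naming scheme is a hypothetical convention, not a Ceph requirement.
snap_name() {
    echo "${1}-${2:-$(date -u +%Y%m%dT%H%M%S)}"
}

# create_snapshot POOL IMAGE SNAP: snapshot the current state of POOL/IMAGE.
create_snapshot() {
    run rbd snap create "$1/$2" --snap "$3"
}

# rollback_snapshot POOL IMAGE SNAP: roll POOL/IMAGE back to SNAP.
rollback_snapshot() {
    run rbd snap rollback "$1/$2" --snap "$3"
}
```

For example, `create_snapshot <pool-name> <image-name> "$(snap_name backup)"` prints the command for a snapshot named after the current UTC time. Set `DRY_RUN=0` only after checking the printed commands against your own cluster.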

2) Pool

A Pool can be considered a logical partition for storing data in Ceph, similar to a namespace. A Ceph cluster can have multiple Pools, each with a certain number of placement groups (PGs). Objects in a PG are mapped to different OSDs.

Different Pools can have their own settings for replica count, data scrubbing frequency, snapshot size, ownership, etc.

Creating a snapshot

# rados mksnap <snap_name> -p <pool_name>

# rados lssnap -p <pool_name>

Restoring an object in a pool to its state at snapshot creation (rados rolls back one object at a time)

# rados -p <pool_name> rollback <obj_name> <snap_name>

It is important to note that these two levels of snapshots are mutually exclusive: only one of them can be used in a given pool. If an RBD image has ever been created in a pool (even if all images have since been deleted), pool snapshots cannot be created for that pool. Conversely, if a pool snapshot has been taken, RBD image snapshots cannot be created in that pool.

Import and Export of Incremental Snapshots for RBD

Ceph also supports the import and export of incremental snapshots for RBD, as detailed below:

Exporting incremental images:

a) Export and import changes from the creation of an image up to the present:

Save incremental changes of <pool-name/image-name> since creation to <image_diff> file

# rbd export-diff <pool-name/image-name> <image_diff>

Import diff of an image from creation to a specific moment

# rbd import-diff <image_diff> <pool-name/image-name>

After this command runs, <pool-name/image-name> reflects the state recorded in <image_diff>, that is, the state of the source image at the time of export.

b) Export changes of an image from creation to a specific snapshot:

Save the changes of <pool-name/image-name> from its creation up to snapshot <snap-name> to the <image_diff> file

# rbd export-diff <pool-name/image-name>@<snap-name> <image_diff>

c) Export changes of an image from a specific snapshot to the current state:

Save the changes made to <pool-name/image-name> since <snap_name> was created to the <image_diff> file

# rbd export-diff <pool-name/image-name> --from-snap <snap_name> <image_diff>

Importing incremental images:

a) Import a diff file into an image (for a diff exported with --from-snap, the target image must already contain that starting snapshot)

# rbd import-diff <image_diff> <pool-name/image-name>
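The export cases above differ only in whether a starting snapshot (`--from-snap`) and an ending snapshot (`@<snap-name>`) are given. The sketch below makes that explicit by building the command line from those two optional values. The helper names `export_diff_cmd` and `import_diff_cmd` are hypothetical, and the functions only print commands rather than running them.

```shell
#!/bin/sh
# export_diff_cmd IMAGE_SPEC FROM_SNAP TO_SNAP OUT_FILE
# Prints the rbd export-diff command for the cases described above.
# Pass "" as FROM_SNAP to start from image creation,
# and "" as TO_SNAP to export up to the current image state.
export_diff_cmd() (
    image_spec="$1"; from_snap="$2"; to_snap="$3"; out_file="$4"
    cmd="rbd export-diff"
    if [ -n "$from_snap" ]; then
        cmd="$cmd --from-snap $from_snap"
    fi
    if [ -n "$to_snap" ]; then
        cmd="$cmd ${image_spec}@${to_snap}"
    else
        cmd="$cmd $image_spec"
    fi
    echo "$cmd $out_file"
)

# import_diff_cmd DIFF_FILE IMAGE_SPEC: prints the matching import command.
import_diff_cmd() (
    echo "rbd import-diff $1 $2"
)
```

Supplying both a starting and an ending snapshot produces the snapshot-to-snapshot form, which is the one used for the recurring backups in the disaster recovery procedure.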

Snapshot-based Remote Disaster Recovery Solution for Ceph

Assume there are two data centers, master_dc and backup_dc, each with its own Ceph storage system.

Initial Backup:

1. Create an image, image-bak, in backup_dc to receive the initial backup.

# rbd create <image-bak> --size 1024 -p <pool-bak>

2. Create an image snapshot in master_dc.

# rbd snap create <pool-mas/image-mas> --snap <snap-mas>

3. Export the changes from image creation up to the snapshot.

# rbd export-diff <pool-mas/image-mas>@<snap-mas> <image-snap-diff>

4. Transfer the exported incremental file “<image-snap-diff>” to backup_dc using scp or other methods.

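5. Import the incremental snapshot file into the image in backup_dc. The import also creates the <snap-mas> snapshot on the backup image, which later incremental imports rely on.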

# rbd import-diff <image-snap-diff> <pool-bak/image-bak>
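The initial backup can also be streamed without an intermediate file, because `rbd export-diff` writes to standard output and `rbd import-diff` reads from standard input when `-` is given as the path. The sketch below prints such a plan rather than executing it. The function name `seed_plan`, the use of `ssh` as the transport, and the assumption that the backup host can run `rbd` against the backup cluster are all illustrative choices, not part of the original procedure.

```shell
#!/bin/sh
# seed_plan SRC_SPEC SNAP DST_HOST DST_SPEC SIZE_MB
# Prints (does not run) the commands for the initial backup, streaming the
# diff over ssh instead of writing a file and copying it with scp.
seed_plan() (
    src_spec="$1"; snap="$2"; dst_host="$3"; dst_spec="$4"; size_mb="$5"
    # Step 1: create the empty target image in backup_dc.
    echo "ssh $dst_host rbd create $dst_spec --size $size_mb"
    # Step 2: snapshot the source image in master_dc.
    echo "rbd snap create ${src_spec}@${snap}"
    # Steps 3-5: export up to the snapshot and import on the backup side.
    echo "rbd export-diff ${src_spec}@${snap} - | ssh $dst_host rbd import-diff - $dst_spec"
)
```

Review the printed plan before running any of it. Writing the diff to a file first, as in the steps above, is still useful when you want to keep or checksum the diff before importing it.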

Subsequent Backups:

Assume the latest snapshot in both master_dc and backup_dc is snap-1.

1. Create a new image snapshot in the master_dc.

# rbd snap create <pool-mas/image-mas> --snap <snap-2>

2. Export the incremental difference between snap-1 and the latest snap-2.

# rbd export-diff --from-snap <snap-1> <pool-mas/image-mas>@<snap-2> <image-snap1-snap2-diff>

3. Transfer the exported incremental file to backup_dc.

4. Import the incremental file into the image in backup_dc.

# rbd import-diff <image-snap1-snap2-diff> <pool-bak/image-bak>

The above steps outline the key points of a backup solution based on snapshot technology. You can use this as a foundation to write your own automated backup and recovery scripts, thereby creating a more complete and intelligent two-center backup solution.
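As a starting point for such a script, the sketch below plans one incremental cycle. It picks the newest snapshot that exists on both sides as the `--from-snap` base and prints the commands to run. The function names and the `ssh` transport are assumptions for illustration. The snapshot lists are passed in as plain whitespace-separated strings, oldest first, and are assumed to have been collected separately (for example from `rbd snap ls` on each cluster).

```shell
#!/bin/sh
# latest_common_snap "SRC_SNAPS" "DST_SNAPS"
# Each argument is a whitespace-separated list of snapshot names, oldest first.
# Names must not contain spaces or glob characters.
# Prints the newest name present in both lists, or nothing if there is none.
latest_common_snap() (
    common=""
    for s in $1; do
        for d in $2; do
            if [ "$s" = "$d" ]; then
                common="$s"
            fi
        done
    done
    echo "$common"
)

# incremental_plan SRC_SPEC DST_HOST DST_SPEC NEW_SNAP "SRC_SNAPS" "DST_SNAPS"
# Prints the commands for one incremental backup cycle. Fails with status 1
# when the two sides share no snapshot, since an initial backup is needed first.
incremental_plan() (
    base="$(latest_common_snap "$5" "$6")"
    if [ -z "$base" ]; then
        echo "no common snapshot; run an initial backup first" >&2
        exit 1
    fi
    echo "rbd snap create ${1}@${4}"
    echo "rbd export-diff --from-snap $base ${1}@${4} - | ssh $2 rbd import-diff - $3"
)
```

Choosing the base from the snapshots both clusters actually have, rather than assuming the previous run succeeded, keeps a failed transfer from breaking the chain: the next cycle simply exports a larger diff from the last snapshot that reached backup_dc.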

Vinchin Backup & Recovery---Your Virtualization Guard

When building a modern IT infrastructure, many organizations integrate Ceph into a virtualization platform such as Proxmox. In a Proxmox environment, backing up and restoring virtual machines is essential to keeping the whole infrastructure stable and its data safe.

Vinchin Backup & Recovery is a robust protection solution for Proxmox VE environments. It provides advanced backup features, including automatic VM backup, agentless backup, LAN/LAN-Free backup, offsite copy, effective data reduction, cloud archive, instant restore, granular restore, and more, and follows the 3-2-1 backup rule to protect the security and integrity of your data.

In addition, data encryption and anti-ransomware protection give your Proxmox VE VM backups two layers of defense. You can also easily migrate data from a Proxmox host to another virtual platform and vice versa.

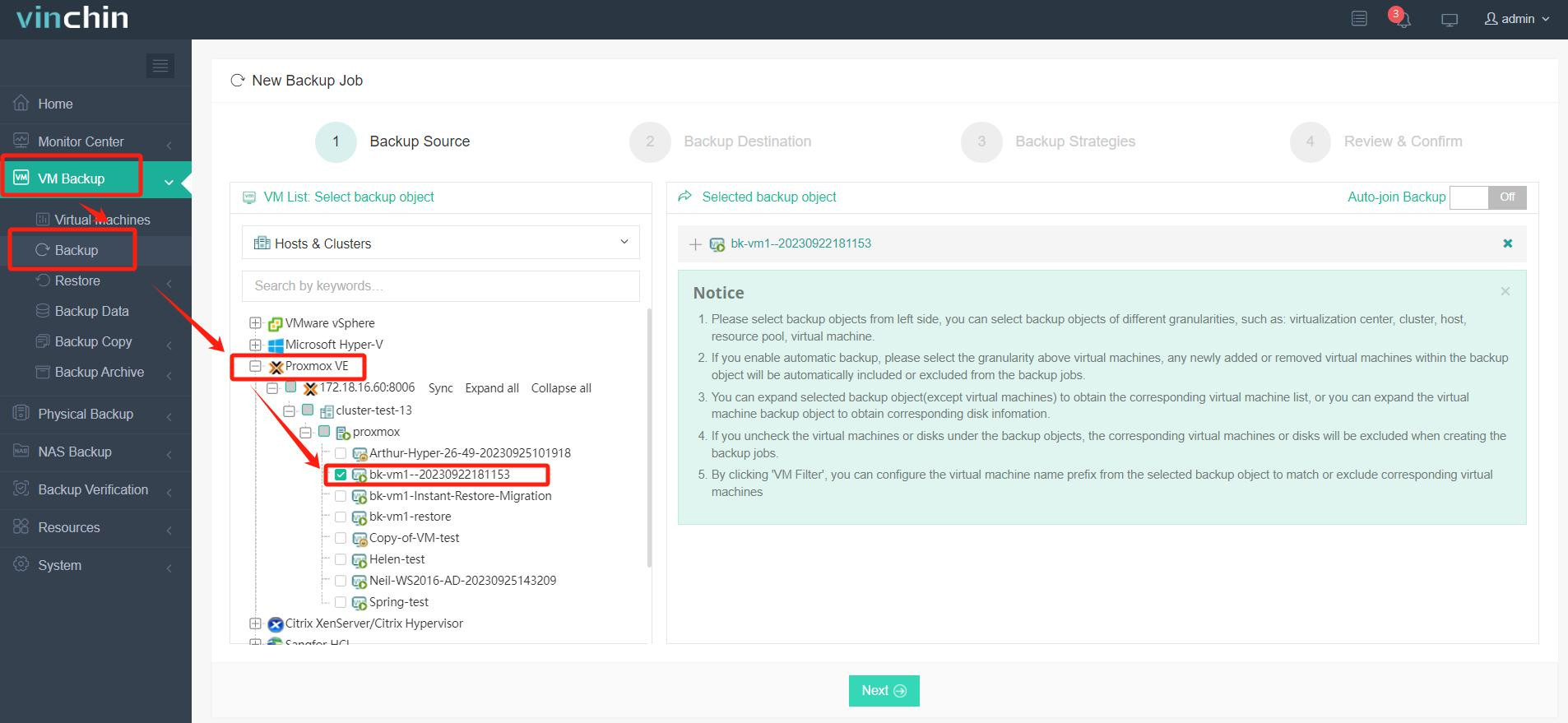

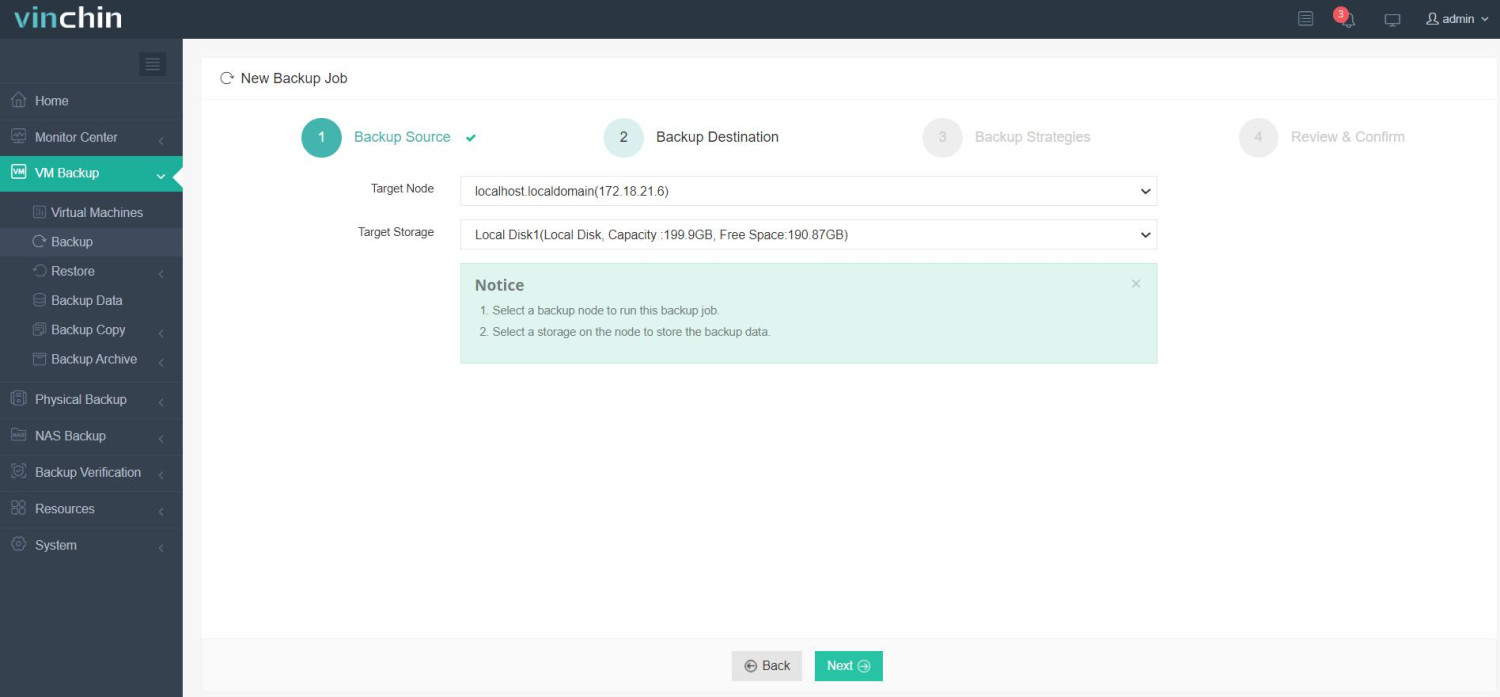

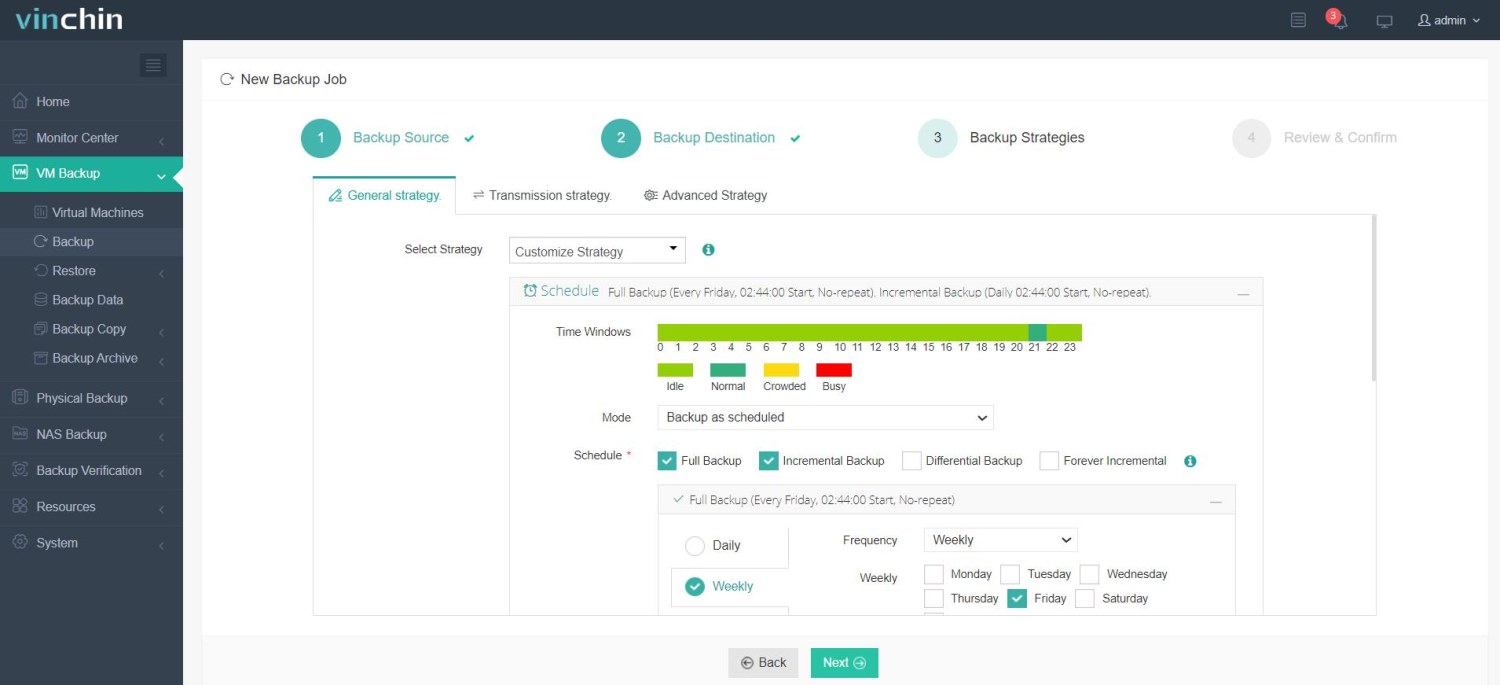

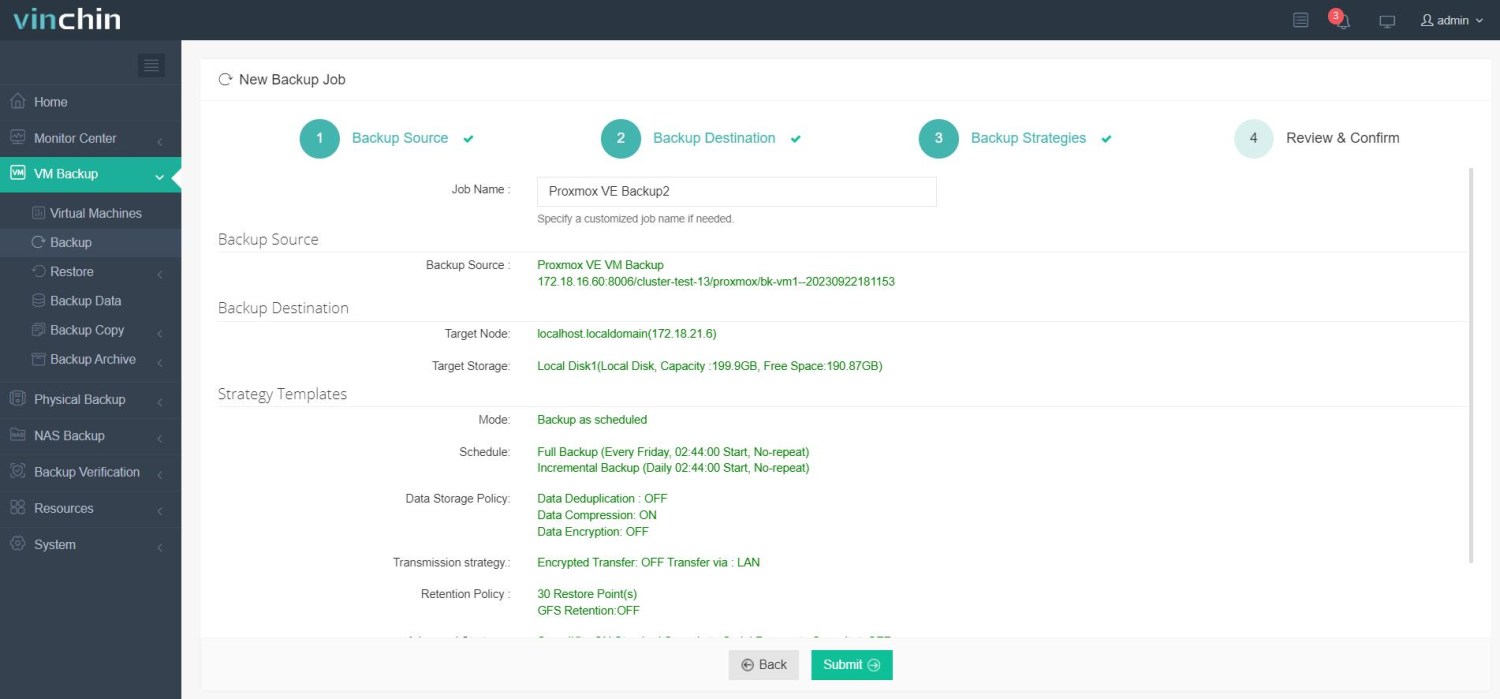

It only takes 4 steps to back up Proxmox VE VMs:

1. Select the backup object.

2. Select backup destination.

3. Configure backup strategies.

4. Review and submit the job.

Start using this powerful system with a 60-day full-featured trial. You can also contact us with your requirements to receive a solution tailored to your IT environment.

Backup Ceph FAQs

1. Q: What is the downside of Ceph?

A: The downside of Ceph is its complexity in setup and management, which can be challenging for those with less experience. Additionally, Ceph’s performance can suffer under certain workloads if not properly tuned, and it demands continuous monitoring and maintenance to ensure smooth operation.

2. Q: What is the difference between Proxmox Ceph and ZFS?

A: Proxmox Ceph is a scalable, distributed storage system ideal for large, fault-tolerant deployments. ZFS is a robust file system and volume manager known for data integrity, snapshots, and compression, best for single-node setups.

Conclusion

Ceph backup and recovery is an indispensable part of enterprise data management. It protects the security and reliability of data and improves the stability and availability of the business. In practice, choose backup and recovery strategies that match your actual needs and environment so that you get the full benefit of Ceph as a distributed storage system.
