- What is Load Balancing?
- What is the Role of Load Balancing?
- How does Load Balancing work?
- Difference between Hardware and Software Load Balancers
- Load Balancing Algorithms
- Types of Load Balancers
- Efficient VM Backup with Vinchin Backup & Recovery
- Virtual Machine Load Balancing FAQs
- Conclusion

With digital services growing rapidly and network access demand rising sharply, load balancing has become core infrastructure for keeping systems available and stable. Acting as the central dispatcher in a distributed architecture, a load balancer spreads user requests across multiple server nodes, which relieves single-node performance bottlenecks and uneven resource utilization. The technology continues to evolve and underpins high concurrency and high availability in modern internet services.

What is Load Balancing?

Load balancing means distributing load (work tasks or network requests) across multiple operating units (servers or components). The goal is to spread network traffic as evenly as possible across multiple servers so that the business system as a whole stays highly available.

In the early days of the internet, networks were less developed, traffic was relatively low, and services were simpler, so a single server or instance was often enough to meet access demands. Today, traffic can easily reach tens or even hundreds of billions of requests, and a single server or instance can no longer keep up, hence the need for clusters. Whether the goal is high availability or high performance, multiple machines are required to scale service capacity, and users should receive consistent responses regardless of which server they connect to.

At the same time, the construction and scheduling of service clusters must remain transparent to the user. Whether tens or thousands of machines respond to requests should not be the user's concern. Users only need to remember one domain name. The technical component responsible for scheduling multiple machines and providing services via a unified interface is known as the load balancer.

What is the Role of Load Balancing?

High concurrency: By using certain algorithm strategies to distribute traffic as evenly as possible to backend instances, it increases the concurrent processing capacity of the cluster.

Scalability: Depending on the network traffic volume, backend server instances can be increased or decreased, controlled by the load balancing device, providing scalability to the cluster.

High availability: The load balancer monitors candidate instances through algorithms or other performance data. When an instance is overloaded or abnormal, it reduces its traffic or skips it entirely, sending requests to other available instances, thus enabling high availability.

Security protection: Some load balancers offer security features such as blacklist/whitelist handling and firewalls.
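The high-availability role described above (skipping instances that are overloaded or abnormal) can be sketched as a simple filter. This is a minimal illustration only: the `Backend` fields and the `max_connections` threshold are hypothetical and not taken from any particular product.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    # Hypothetical fields chosen for illustration.
    name: str
    healthy: bool = True
    active_connections: int = 0
    max_connections: int = 100


def eligible_backends(backends):
    """Return only backends that are healthy and below their connection limit."""
    return [
        b for b in backends
        if b.healthy and b.active_connections < b.max_connections
    ]
```

A balancing algorithm would then choose among the returned instances only, so an unhealthy or saturated instance stops receiving new requests until it recovers.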

How does Load Balancing work?

Load balancing can be implemented in several ways.

Hardware load balancers are physical appliances installed and maintained on-premises.

Software load balancers are applications installed on privately-owned servers or used as managed cloud services (cloud load balancing).

Load balancers work by mediating client requests in real time and determining which backend servers are best suited to handle them. To prevent any single server from being overloaded, the load balancer routes requests to any number of available servers, whether on-premises or hosted in server farms or cloud data centers.

Once a request is assigned, the selected server responds to the client via the load balancer. The load balancer completes the client-to-server connection by matching the client’s IP address with the server's IP address. The client and server can then communicate and perform the required tasks until the session ends.

If there is a traffic spike, the load balancer may spin up additional servers to meet demand. Conversely, if there’s a lull in traffic, it may reduce the number of available servers. It can also assist network caching by routing traffic to cache servers that temporarily store previous user requests.
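As a rough sketch of the scale-up and scale-down decision described above, the function below estimates how many servers are needed for a given request rate. The per-server capacity and the minimum and maximum bounds are assumed values for illustration; in practice this decision is often made by an autoscaling component working alongside the load balancer.

```python
import math


def desired_server_count(requests_per_second, capacity_per_server,
                         min_servers=2, max_servers=20):
    """Estimate the server count for the current load, clamped to fixed bounds."""
    needed = math.ceil(requests_per_second / capacity_per_server)
    return max(min_servers, min(max_servers, needed))
```

The lower bound keeps some redundancy during quiet periods, and the upper bound caps cost during extreme spikes.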

Difference between Hardware and Software Load Balancers

▪️The most obvious difference is that hardware load balancers require proprietary rack-mounted devices, whereas software load balancers only need to be installed on standard x86 servers or virtual machines. Hardware network load balancers are typically over-provisioned—meaning they are sized to handle occasional traffic spikes. Additionally, each hardware device must be paired with another device for high availability in case of failure.

▪️Another key difference lies in scalability. As network traffic grows, data centers must provide enough load balancers to meet peak demands. For many enterprises, this means most load balancers remain idle until traffic peaks (e.g., on Black Friday).

▪️If traffic unexpectedly exceeds capacity, end-user experience is significantly impacted. Software load balancers, on the other hand, can scale elastically to meet demand. Whether traffic is low or high, software load balancers can automatically scale in real time, eliminating overprovisioning costs and concerns about unexpected spikes.

▪️Moreover, configuring hardware load balancers can be complex. Software load balancers, built on software-defined principles, can span multiple data centers and hybrid/multi-cloud environments. Hardware appliances generally cannot be deployed in public cloud environments, while software load balancers are compatible with bare metal, virtual, container, and cloud platforms.

Load Balancing Algorithms

Common load balancing algorithms are categorized into:

Static Load Balancing

Dynamic Load Balancing

Common Static Algorithms: Round Robin, Random, Source IP Hashing, Consistent Hashing, Weighted Round Robin, Weighted Random

Common Dynamic Algorithms: Least Connections, Least Response Time

☑️Random

Requests are randomly assigned to nodes. By the law of large numbers, the more requests clients send, the closer the distribution gets to even, similar to round-robin results. However, a random strategy may overload lower-spec machines and crash them, potentially triggering cascading failures. It is therefore advisable to use equally configured backend servers with this method. The quality of the distribution depends on the quality of the random number generator.
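A minimal sketch of random selection, with a small simulation showing how the distribution evens out over many requests. The server names and the `simulate` helper are illustrative assumptions.

```python
import random
from collections import Counter


def pick_random(servers, rng=random):
    """Choose one server uniformly at random."""
    return rng.choice(servers)


def simulate(servers, n, seed=0):
    """Count how often each server is chosen over n requests."""
    rng = random.Random(seed)  # seeded so the simulation is repeatable
    return Counter(pick_random(servers, rng) for _ in range(n))
```

Calling `simulate(["a", "b", "c"], 30000)` should give each server roughly a third of the requests, while a run of only a few dozen requests can be noticeably uneven.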

☑️Round Robin

This algorithm distributes requests to servers one after another in a continuous rotation, commonly through DNS. It is the most basic load balancing method, relying only on the list of server names to determine the next recipient of incoming requests.
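The rotation can be sketched in a few lines. This is an in-process illustration with made-up server names, not a DNS implementation.

```python
import itertools


class RoundRobinBalancer:
    """Hand out servers in a fixed, repeating order."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._cycle = itertools.cycle(servers)

    def next_server(self):
        return next(self._cycle)
```

With three servers `a`, `b`, `c`, successive calls to `next_server()` return `a`, `b`, `c`, `a`, `b`, and so on, regardless of how busy each server is.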

☑️Weighted Round Robin

In addition to DNS names, each server is assigned a "weight" that determines its priority in handling incoming requests. Administrators assign weights based on server capacity and network needs.
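One way to implement weighting is the "smooth" weighted round robin variant, which interleaves servers instead of sending long bursts to the heaviest one. The sketch below assumes integer weights and hypothetical server names; it is one possible approach, not necessarily what a given load balancer uses.

```python
class SmoothWeightedRoundRobin:
    """Smooth weighted round robin over a {server: weight} mapping."""

    def __init__(self, weights):
        self._weights = dict(weights)
        self._current = {name: 0 for name in self._weights}
        self._total = sum(self._weights.values())

    def next_server(self):
        # Every server gains its weight; the current leader is chosen
        # and then pushed back by the total weight.
        for name, weight in self._weights.items():
            self._current[name] += weight
        chosen = max(self._current, key=self._current.get)
        self._current[chosen] -= self._total
        return chosen
```

With weights `{"a": 5, "b": 1, "c": 1}`, every seven consecutive picks contain `a` five times and `b` and `c` once each, with the lighter servers spread through the cycle.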

☑️Weighted Random

Similar to Weighted Round Robin, this method assigns different weights based on server configuration and system load. The key difference is that it randomly chooses servers according to their weights, rather than sequentially.
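A short sketch of weighted random selection using the standard library. The weights shown in the usage note are assumed values.

```python
import random


def pick_weighted_random(weights, rng=random):
    """Choose a server with probability proportional to its weight.

    `weights` maps server name to a positive number.
    """
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]
```

For example, with `{"big": 3, "small": 1}` the `big` server should receive about three quarters of the requests over a long run, but the order of individual picks is unpredictable.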

☑️IP Hash

This method reduces (hashes) the incoming request’s IP address to a smaller value called a hash key. This hash key, derived from the user's IP address, is then used to determine which server to route the request to, so the same client is consistently sent to the same server.
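A minimal sketch of the idea, assuming SHA-256 as the hash function and a fixed server list (both are illustrative choices; real load balancers may use other hash functions).

```python
import hashlib


def ip_hash_key(client_ip: str) -> int:
    """Reduce an IP address string to a 64-bit integer hash key."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def pick_by_ip(client_ip, servers):
    """Map the hash key onto the server list; same IP, same server."""
    return servers[ip_hash_key(client_ip) % len(servers)]
```

As long as the server list does not change, repeated requests from one address always land on the same server.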

☑️Least Connections

As the name suggests, when a new client request is received, this algorithm prioritizes servers with the fewest active connections. It helps prevent server overload and maintains consistent load distribution across servers.
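A minimal sketch of least-connections selection. It assumes the balancer itself tracks connection counts through hypothetical `acquire` and `release` calls.

```python
class LeastConnectionsBalancer:
    """Send each new request to the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Ties are broken by the order in which servers were registered.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Called when a connection closes; never drops below zero.
        if self.active[server] > 0:
            self.active[server] -= 1
```

Unlike round robin, this reacts to long-lived connections: a server that is still busy with earlier requests is passed over until its count drops.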

☑️Least Response Time

This algorithm combines the Least Connections method with the shortest average server response time. It evaluates both the number of connections and the time it takes for servers to process and respond to requests. The server with the fewest active connections and fastest response receives the request.
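The text does not specify how the two signals are combined, so the sketch below assumes one plausible scoring rule: (active connections + 1) multiplied by a moving average of response time, with the lowest score winning. The `ServerStats` class and the smoothing factor `alpha` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ServerStats:
    name: str
    active: int = 0
    avg_ms: float = 0.0

    def record(self, elapsed_ms, alpha=0.2):
        """Update the exponentially weighted moving average of response time."""
        if self.avg_ms == 0.0:
            self.avg_ms = elapsed_ms
        else:
            self.avg_ms = (1 - alpha) * self.avg_ms + alpha * elapsed_ms


def pick_least_response_time(servers):
    """Pick the server with the lowest (active + 1) * average latency score."""
    return min(servers, key=lambda s: (s.active + 1) * s.avg_ms)
```

Real products may weigh connections and latency differently; the point is only that both measurements feed a single comparison.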

☑️Source Hashing

Based on the source IP of the request, a hash is calculated, and the result is used to select a server from a list using a modulo operation. This method is often used to improve cache hit rates or to keep requests from the same session on one server. However, it has drawbacks: if a single source (e.g., an abusive or automated client) generates excessive traffic, it may overload one backend server, causing service failures and access issues. Degradation strategies are therefore also needed.
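The modulo selection, together with one possible degradation strategy, might look like the sketch below. The fallback rule (switch to the least-loaded server once the hashed server reaches a connection limit) is an assumption made for illustration; the text does not prescribe a particular strategy.

```python
import zlib


def pick_with_fallback(client_ip, servers, active, limit):
    """Hash the source IP to a server; fall back if that server is saturated.

    `active` maps server name to its current connection count.
    """
    index = zlib.crc32(client_ip.encode("utf-8")) % len(servers)
    primary = servers[index]
    if active.get(primary, 0) < limit:
        return primary
    # Degraded mode: session stickiness is given up to protect the hot server.
    return min(servers, key=lambda s: active.get(s, 0))
```

The trade-off is that a client redirected this way may lose cached or session state held on its usual server.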

☑️Consistent Hashing

A specialized hashing scheme in which adding a server redirects only a portion of the requests from existing nodes to the new node, while all other requests stay where they were. This allows smooth migration when the cluster grows or shrinks.
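A compact sketch of a hash ring with virtual nodes illustrates this property. The choice of SHA-256, the number of replicas, and the node names are illustrative assumptions.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    return int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Consistent hash ring; each node is placed at several virtual points."""

    def __init__(self, nodes=(), replicas=100):
        self.replicas = replicas
        self._ring = []  # sorted list of (point, node)
        for node in nodes:
            self.add(node)

    def add(self, node):
        for i in range(self.replicas):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def get(self, key):
        """Return the node owning the first point at or after the key's hash."""
        if not self._ring:
            raise LookupError("ring is empty")
        idx = bisect.bisect_right(self._ring, (_hash(key), "")) % len(self._ring)
        return self._ring[idx][1]
```

When a fourth node is added to a three-node ring, only the keys that now fall on the new node's points move; with plain modulo hashing, most keys would be reassigned.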

Types of Load Balancers

Although all types distribute traffic, specific types serve specific functions.

✔️Network Load Balancers

Optimize traffic and reduce latency in local and wide area networks. They use network information such as IP addresses and destination ports, along with TCP and UDP protocols, to route network traffic, providing sufficient throughput to meet user demand.

✔️Application Load Balancers

Use application-layer content such as URLs, SSL sessions, and HTTP headers to route API request traffic. Since multiple application-layer servers often have overlapping functions, checking this content helps determine which server can quickly and reliably fulfill specific requests.
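As a simplified sketch of content-based routing, the function below picks a backend pool from the request's Host header and URL path. The rule format, pool names, and hostnames are hypothetical.

```python
from typing import NamedTuple, Optional


class Rule(NamedTuple):
    host: Optional[str]   # None matches any host
    path_prefix: str
    pool: str


def choose_pool(host, path, rules, default="web-pool"):
    """Return the pool of the first rule matching the host and path prefix."""
    for rule in rules:
        if rule.host is not None and rule.host != host.lower():
            continue
        if path.startswith(rule.path_prefix):
            return rule.pool
    return default


# Example rules: API calls go to one pool, static assets to another.
EXAMPLE_RULES = [
    Rule(None, "/api/", "api-pool"),
    Rule("static.example.com", "/", "static-pool"),
]
```

A network load balancer could not make this decision, because it never inspects the HTTP request itself.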

✔️Virtual Load Balancers

With the rise of virtualization and VMware technologies, virtual load balancers are now used to optimize traffic between servers, virtual machines, and containers. Open-source container orchestration tools like Kubernetes provide virtual load balancing functions to route requests between container nodes within a cluster.

✔️Global Server Load Balancers

Route traffic to servers across multiple geographic locations to ensure application availability. User requests can be assigned to the nearest available server or, in case of failure, to another location with available servers. This failover capability makes global server load balancing a key part of disaster recovery.
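The nearest-location choice with failover can be sketched as follows. Measured latency stands in for "nearest", and the region names are made up; real global load balancers may use geography, DNS, or other signals instead.

```python
def pick_region(latency_ms, healthy):
    """Choose the healthy region with the lowest latency for this client.

    `latency_ms` maps region name to measured latency;
    `healthy` is the set of regions currently passing health checks.
    """
    candidates = [region for region in latency_ms if region in healthy]
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=latency_ms.get)
```

If the closest region fails its health checks, it simply drops out of the candidate list and the next-closest region takes over.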

Efficient VM Backup with Vinchin Backup & Recovery

Alongside efficient load balancing, enterprises also need reliable data protection. Vinchin Backup & Recovery provides comprehensive backup and recovery support for virtualized environments.

Vinchin Backup & Recovery is an advanced data protection solution that supports a wide range of popular virtualization platforms, including VMware, Hyper-V, XenServer, Red Hat Virtualization, Oracle, and Proxmox, as well as databases, NAS, file servers, and Linux and Windows Server. It provides features such as agentless backup, forever incremental backup, V2V migration, instant restore, granular restore, backup encryption, compression, deduplication, and ransomware protection. These are critical for ensuring data security and optimizing storage resource utilization.

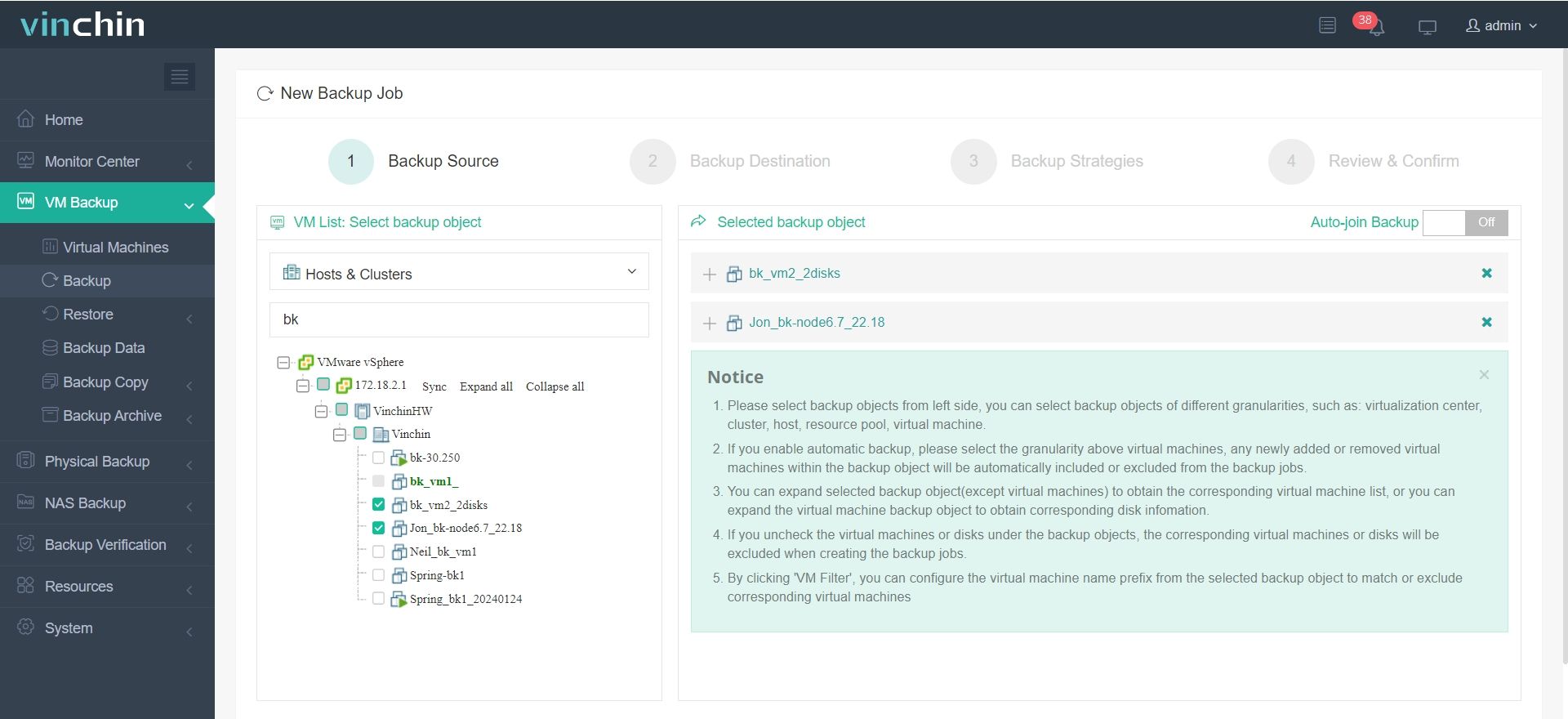

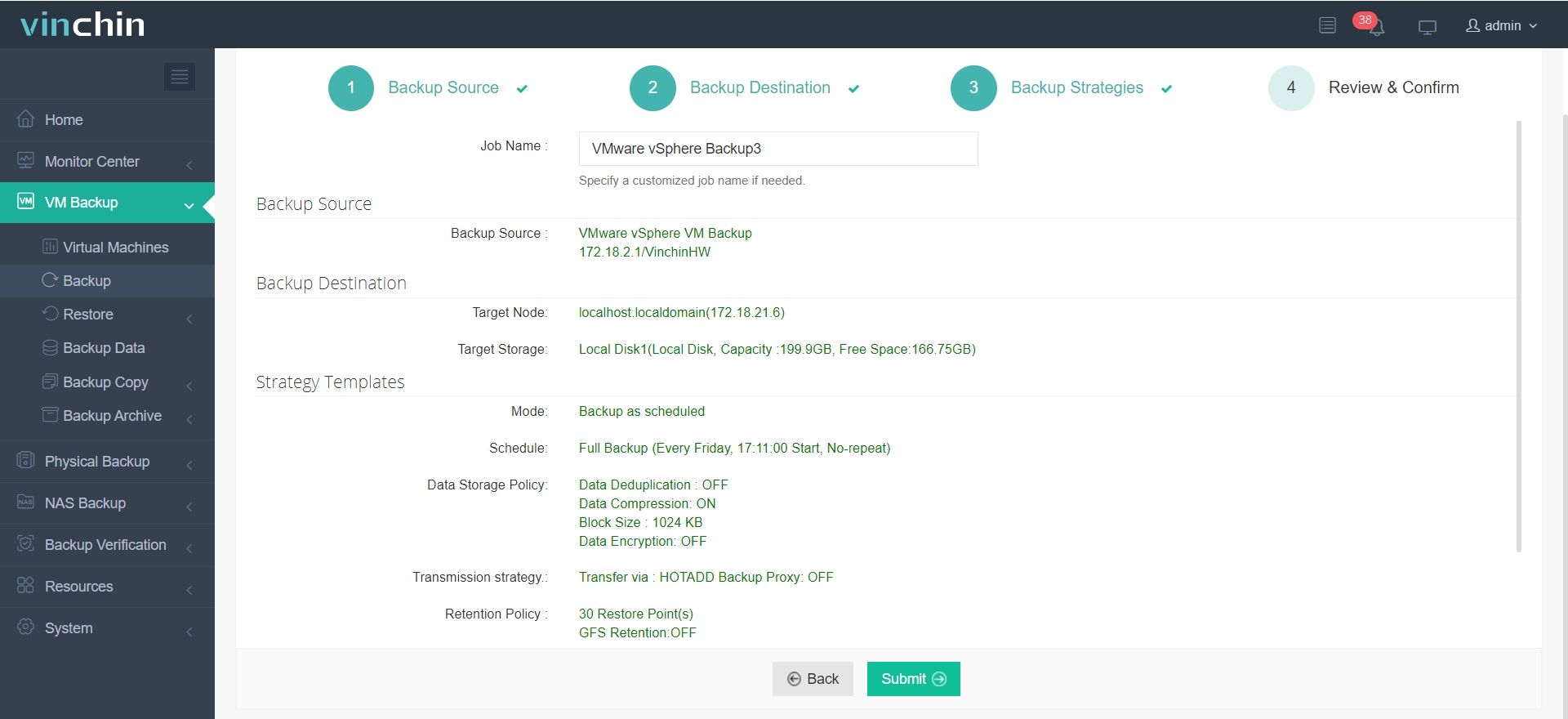

To back up a VM with Vinchin Backup & Recovery, follow these steps:

1. Choose the VM you want to back up

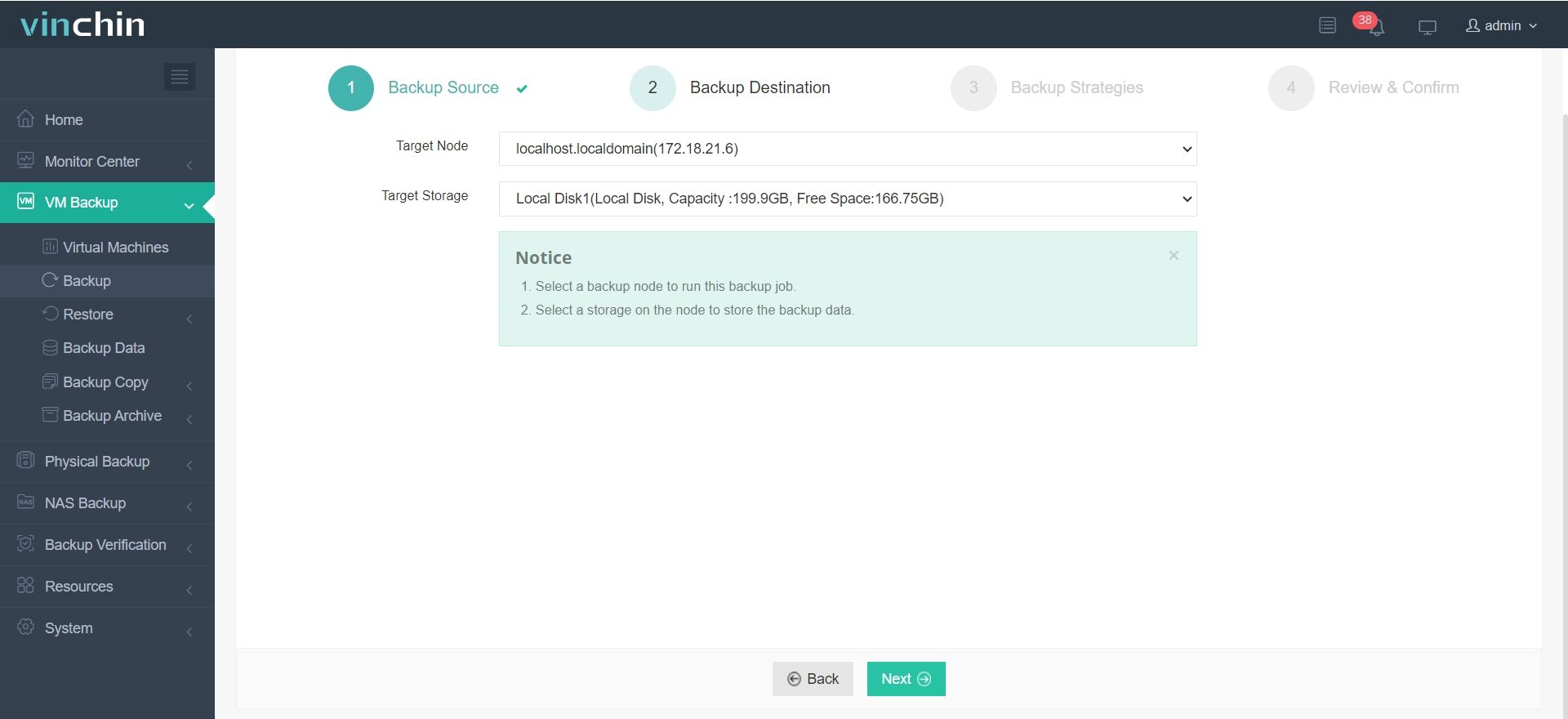

2. Specify backup destination

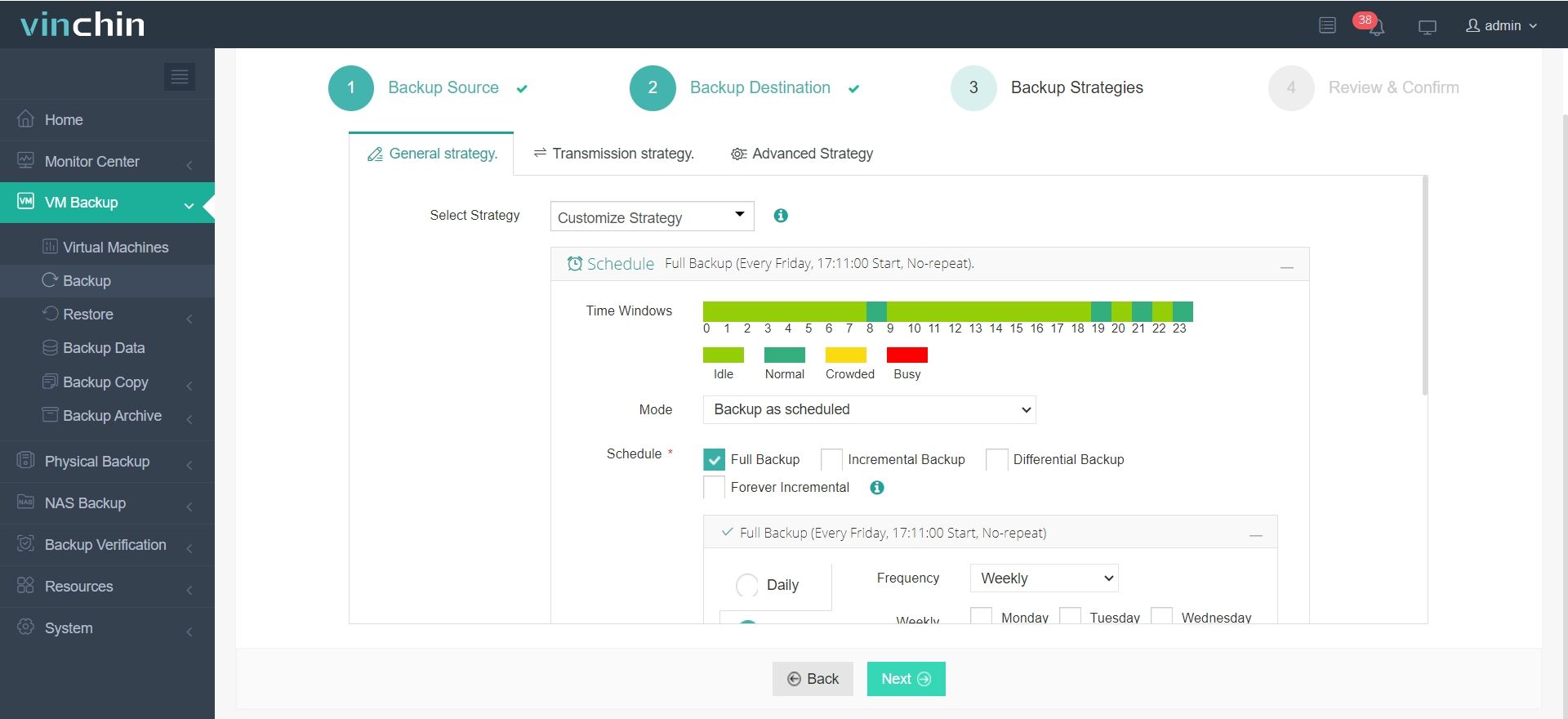

3. Configure backup strategies

4. Submit the backup job

Vinchin Backup & Recovery is trusted by thousands globally. Try the 60-day full-featured trial, or share your specific needs to receive a solution tailored to your IT infrastructure.

Virtual Machine Load Balancing FAQs

1. What's the difference between load balancing and high availability (HA)?

Load balancing distributes workloads efficiently across resources. High availability ensures that VMs are restarted on other hosts if the current host fails. They often work together to improve overall system resilience.

2. What are affinity and anti-affinity rules in load balancing?

Affinity rules keep specified VMs together on the same host. Anti-affinity rules ensure VMs are kept on different hosts (e.g., for redundancy). These rules guide the load balancer to respect workload relationships during migrations.
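A small sketch of how such rules could narrow down the candidate hosts for a VM. The function name, rule representation, and VM and host names are hypothetical and do not reflect any specific hypervisor's API.

```python
def allowed_hosts(vm, hosts, placement, keep_together=(), keep_apart=()):
    """Return the hosts where `vm` may run without breaking a rule.

    `placement` maps already-placed VM names to host names.
    `keep_together` and `keep_apart` are iterables of sets of VM names.
    """
    allowed = set(hosts)
    for group in keep_together:
        if vm in group:
            used = {placement[o] for o in group if o != vm and o in placement}
            if used:
                allowed &= used  # affinity: join the group's current host(s)
    for group in keep_apart:
        if vm in group:
            # anti-affinity: avoid hosts already running a group member
            allowed -= {placement[o] for o in group if o != vm and o in placement}
    return sorted(allowed)
```

A migration or placement step would then pick the least-loaded host from this filtered list rather than from all hosts.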

Conclusion

Load balancing doesn’t guarantee that network traffic is evenly distributed to backend service instances. Instead, its role is to ensure user experience even during unexpected events. Good architecture design and elastic scalability can significantly enhance the effectiveness of load balancing.
